3D Printing Safety - Fire detection relay

building a serverless analytics platform at lolscale

building a data warehouse for testing

complex event processing to detect click fraud

complex event processing for fun and profit part deux

complex event processing for fun and profit

sample esp queries

Parsing SFTP logs with CloudWatch Logs Insights

One-time password sharing... securely!

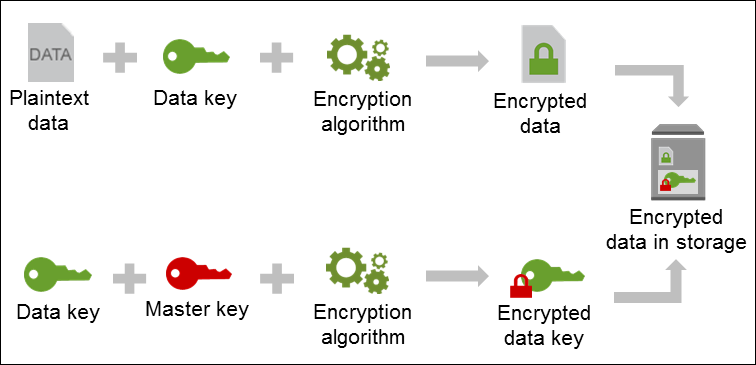

Envelope encryption in Lambda functions with DynamoDB and KMS

Serverless content security policy

Programmatically associating Lambda@Edge with a CloudFront distribution

Serverless blog HOWTO

building a serverless analytics platform at lolscale

complex event processing to detect click fraud

complex event processing for fun and profit part deux

complex event processing for fun and profit

sample esp queries

Serverless content security policy

Invalidate CloudFront with Lambda

Programmatically associating Lambda@Edge with a CloudFront distribution

puppet lessons learned

geo blocking with iptables/ipset

creating forensic images

compressing mysql binary logs

building secure linux systems

security policy templates

cloning systems

One-time password sharing... securely!

Envelope encryption in Lambda functions with DynamoDB and KMS

complex event processing to detect click fraud

complex event processing for fun and profit part deux

complex event processing for fun and profit

sample esp queries

One-time password sharing... securely!

Envelope encryption in Lambda functions with DynamoDB and KMS

Serverless content security policy

Invalidate CloudFront with Lambda

Serverless blog HOWTO

building a serverless analytics platform at lolscale

Serverless content security policy

Programmatically associating Lambda@Edge with a CloudFront distribution

introducing cinched

puppet lessons learned

meshed vpn using tinc

geo blocking with iptables/ipset

creating forensic images

compressing mysql binary logs

building secure linux systems

security policy templates

security advice for the average joe

meshed vpn using tinc

geo blocking with iptables/ipset

tcpdump tip: viewing a packet stream data payload

i see packets...

port scanning without a port scanner

remote shell without any tools

validating ip addresses in php

Parsing SFTP logs with CloudWatch Logs Insights

One-time password sharing... securely!

Envelope encryption in Lambda functions with DynamoDB and KMS

benchmarking cinched

introducing cinched

setting up a ca

security advice for the average joe

meshed vpn using tinc

geo blocking with iptables/ipset

creating forensic images

building secure linux systems

port scanning without a port scanner

remote shell without any tools

security policy templates